Plot Twist: DeepSeek Takes on Goliath

Why this changes everything for Africa. Our message is clear: you don't need to outspend the competition; you need to outthink them.

In the News

The Rundown featured in the Davos Edition on doing business in Africa, offering some perspective on AI and Africa. Here are some thoughts from an interview I did with Africa Business and my friend Omar Ben Yedder. Thank you!

Pressmate

We continue to build our Pressmate MVP, which is now ready for testing. Please reach out if you would like your press release workflow to be faster, cheaper, and better. This is about making comms teams more efficient and skilling them up, not about replacing them.

The $8 a month from our paid subscribers really helps us build and bootstrap. I’d appreciate your financial support to keep newsletters like this going and to allow us to build new products that make sense for comms teams. Thank you!

Wake Up Call

Silicon Valley just got the wake-up call of the decade. While tech giants were busy flexing their billion-dollar AI muscles, a small research lab in China quietly rewrote the rules of the game. DeepSeek has achieved something remarkable: in just two months and with less than $6 million (pocket change by Silicon Valley standards), they've developed an AI model that's outperforming some of the most sophisticated systems from OpenAI, Meta, and Anthropic.

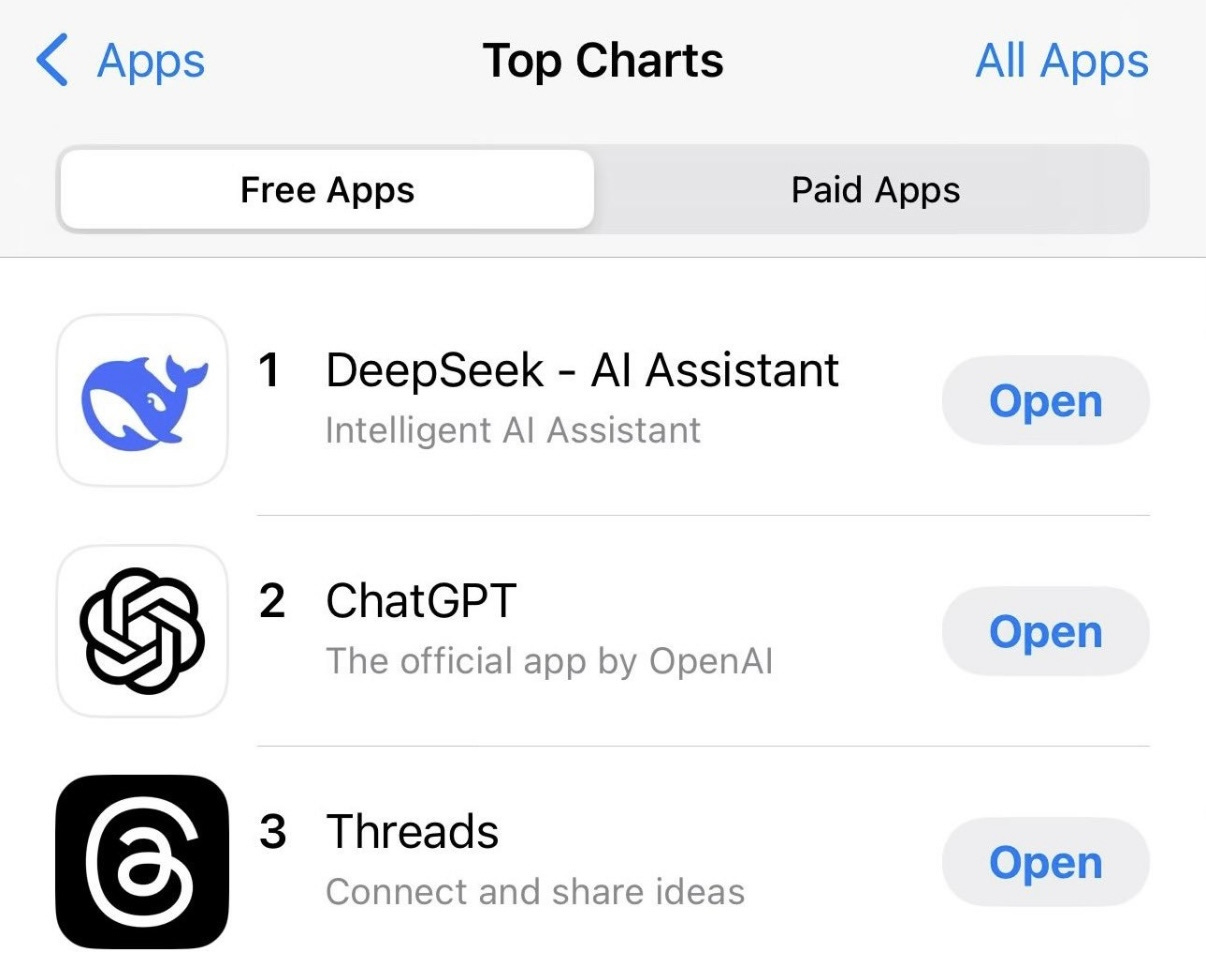

Their model, R1, didn't just compete; it won, and at a fraction of the cost. And now it's #1 in the Apple App Store’s download rankings, with even more recent downloads than ChatGPT. The latest model was launched only 5 days ago.

Look at these numbers:

OpenAI reportedly spent over $100 million training GPT-4

Meta plans to spend $65 billion on AI development in 2025

The Stargate Project represents a $500 billion investment in AI infrastructure

Most leading AI companies use 16,000+ specialized Nvidia chips

Now, here's what DeepSeek did:

The numbers tell a stunning story. While Meta announces plans to spend $65 billion on AI development in 2025, and the Stargate Project commits a mind-boggling $500 billion to AI infrastructure, DeepSeek achieved comparable (and sometimes better) results with just $5.6 million in training costs. Let that sink in, people!

$5.6 million in training cost

Uses only about 2,000 specialized chips

API costs 95% lower than competitors

Matches or exceeds performance of major competitors
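To put those figures side by side, here is a quick back-of-the-envelope calculation in Python. It uses only the numbers quoted above; the variable names are mine, and since the GPT-4 figure is reported as "over $100 million", the cost ratio is a rough lower bound rather than a precise measurement.

```python
# Figures as quoted in this newsletter (reported numbers, not audited ones).
gpt4_training_usd = 100e6        # "over $100 million", so treat as a floor
deepseek_training_usd = 5.6e6    # DeepSeek's reported training cost
typical_chip_count = 16_000      # "16,000+ specialized Nvidia chips"
deepseek_chip_count = 2_000      # "about 2,000 specialized chips"

# How many times more the reported GPT-4 training run cost.
cost_ratio = gpt4_training_usd / deepseek_training_usd

# How many times more chips a typical leading lab is said to use.
chip_ratio = typical_chip_count / deepseek_chip_count

print(f"Training cost: at least {cost_ratio:.1f}x higher")
print(f"Chip count: about {chip_ratio:.0f}x higher")
```

In other words, on the reported figures alone, the gap is roughly 18x on training cost and 8x on chips.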

The US markets tanked on this news

Yikes.

Nvidia's stock dropped 12%. Tech has taken a hit. Why? DeepSeek's success challenges the entire premise that advancing AI requires massive compute and infrastructure, which is exactly what all the big tech companies are investing in.

DeepSeek has sparked a $1 trillion rout in US and European technology stocks, as investors questioned bloated valuations from some of America’s biggest companies - Bloomberg

Where do the billions in Silicon Valley go anyway?

Massive executive compensation packages

Expensive office spaces in prime locations

Huge teams with redundant roles

Inefficient use of computational resources

Enormous marketing and PR budgets

Bloated overhead costs to satisfy shareholder expectations

Meanwhile, DeepSeek, with a lean team of fewer than 200 people, is matching or exceeding the performance of companies with thousands of employees and billions in resources. Something doesn't add up here.

Tough board meetings ahead.

I don’t know how many of you remember this hilarious moment from the Olympics, but if one meme could show how the AI market is doing right now, this is it!

Secret Sauce is Chain of Thought: Thinking Like a Human

Traditional Large Language Models (LLMs) rely heavily on what we call supervised fine-tuning: imagine a student being shown problems and solutions, then being tested on similar problems. It's effective, but expensive and resource-intensive.

R1 takes a different approach. Instead of being spoon-fed solutions, it learns through what's called pure reinforcement learning. Think of it as giving a student a problem with no solution manual. The student has to figure it out themselves, learning from their mistakes and successes along the way.

This approach creates something remarkable: an AI that actually reasons through problems rather than just pattern-matching from training data. When given a question, R1 develops its own chain of thought, similar to how you or I might work through a complex problem. It even fact-checks itself along the way.

First off, it shows you don't need to be rich to make awesome AI anymore. That means more people around the world can do it now. DeepSeek also did something clever: they let the AI learn on its own without humans helping. That's pretty cool and might make American companies have to step up their game.

Deep, Deep Seek Dive

Multi-Head Latent Attention (MLA): Attention is the mechanism that lets a model weigh different parts of its input against each other, a bit like several spotlights sweeping across a book at once. Most modern models already use multiple spotlights, but they have to keep a large memory cache for each one. DeepSeek's MLA compresses that cache into a much smaller "latent" representation and rebuilds the details when needed. This means their models can still pick up subtle relationships and handle multiple aspects of a problem at once, while using far less memory to do it.
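For readers who like to see the arithmetic, here is a minimal sketch of why caching one compressed latent vector per token, instead of a full key and value for every attention head, saves memory. The sizes below are hypothetical, picked only for illustration, and the function names are my own; this is not DeepSeek's actual configuration or code.

```python
def standard_cache_per_token(n_heads: int, head_dim: int) -> int:
    """Numbers stored per token when every head keeps its own key and value."""
    return 2 * n_heads * head_dim


def latent_cache_per_token(latent_dim: int) -> int:
    """Numbers stored per token when only one compressed latent vector is kept."""
    return latent_dim


# Hypothetical sizes, for illustration only.
n_heads, head_dim, latent_dim = 32, 128, 512

standard = standard_cache_per_token(n_heads, head_dim)
latent = latent_cache_per_token(latent_dim)
savings = 1 - latent / standard

print(f"Standard cache: {standard} numbers per token")
print(f"Latent cache:   {latent} numbers per token")
print(f"Memory saved:   {savings:.1%}")
```

The trade-off is a little extra computation to rebuild keys and values from the latent vector, in exchange for a much smaller memory footprint.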

Pure Reinforcement Learning Approach: Instead of just learning from labeled examples (like memorizing a textbook), DeepSeek's models learn through experience and trial and error, more like how humans learn. Their models develop chain-of-thought reasoning by attempting problems and receiving feedback on the results.
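Here is a toy sketch of what "learning from feedback rather than from a solution manual" can look like in code. To be clear, this is not DeepSeek's training method: it is a tiny trial-and-error learner I made up for illustration, where the only signal is whether the final answer to 7 + 5 is right. All names and settings are hypothetical.

```python
import random


def reward(answer: int) -> float:
    """Feedback signal: 1.0 if the answer to 7 + 5 is correct, else 0.0."""
    return 1.0 if answer == 12 else 0.0


def learn_by_trial(candidates, trials=200, explore=0.2, seed=0):
    rng = random.Random(seed)
    value = {c: 0.0 for c in candidates}  # estimated reward for each answer
    count = {c: 0 for c in candidates}    # how often each answer was tried
    for step in range(trials):
        if step < len(candidates):
            choice = candidates[step]                 # try every answer once
        elif rng.random() < explore:
            choice = rng.choice(candidates)           # occasionally explore
        else:
            choice = max(candidates, key=value.get)   # otherwise use best so far
        count[choice] += 1
        # Running average of the rewards seen for this answer.
        value[choice] += (reward(choice) - value[choice]) / count[choice]
    return value, count


value, count = learn_by_trial([10, 11, 12, 13])
best = max(value, key=value.get)
print("Answer the learner settled on:", best)
```

Nobody tells the learner that 12 is correct. It only finds out by trying answers and seeing which one earns a reward, which is the core idea behind learning by trial and error.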

Turning Limitations into Innovations: Here's what's really interesting. Faced with U.S. export controls on advanced chips, DeepSeek had to innovate with less powerful, more readily available hardware. Instead of seeing this as a limitation, they turned it into an opportunity to fundamentally rethink AI development efficiency. Their team of fewer than 200 people managed to achieve what companies with thousands of employees and billions in resources are trying to do.

The real magic here is how DeepSeek combined all these approaches to create something that has completely disrupted the industry. They've proved that advanced AI development doesn't have to mean massive data centers and billion-dollar budgets. It's about smart design, efficient architecture, and innovative thinking.

Why This Changes Everything for Africa

For a very long time we've been told that African countries couldn't develop their own AI because the resource requirements were too high. The narrative was that only wealthy nations or tech giants could afford to play in this space.

DeepSeek just shattered that myth.

At $5.6 million, we're talking about a budget that's within reach of many African national technology initiatives. This isn't just about building AI - it's about data sovereignty, something we've discussed extensively on our Embedded podcast. If a team of fewer than 200 people in China can build world-class AI with limited resources, imagine what African tech hubs in Nairobi, Lagos, or Cape Town could do.

DeepSeek has shown us that the path to advanced AI doesn't require massive infrastructure - it requires smart design and efficient innovation. For Africa, this could be our moment to leapfrog traditional AI development approaches, just as cheaper mobile phones completely changed the game on the continent.

This could be the catalyst Africa needs to start building its own AI infrastructure, protect its data sovereignty, and ensure our stories, our patterns, and our future are shaped by our own technology. This isn't just about catching up - it's about building AI that understands our contexts, our languages, our needs. DeepSeek has shown us it's possible.

You can read more about this here: A Warning to African Leaders: Do Not Sell Your Data Sets

Or listen to our Embedded episode 4 - Lost in translation: Building AI for Africa’s Languages

American companies might lose business if everyone starts using this free Chinese AI instead.

The message is clear: you don't need to outspend the competition; you need to outthink them. For innovators around the world, especially in regions that have been told they lack the resources to compete, DeepSeek's achievement is liberating.

The future of AI might not be about who has the biggest budget, but who has the most innovative approach to problem-solving. And that's a future in which everyone can participate.